Back in 2017, a New York-based iced tea company said it was “pivoting to the blockchain,” and its stock jumped by 200%.

I bring this up because, six years later, AI is as buzzy today as the blockchain was then. Marketing departments have co-opted the term to the point that what ‘AI’ means—and more importantly, how it can really create stronger cybersecurity—can get lost in the noise.

That was clear even at the KuppingerCole European Identity and Cloud Conference 2023, where I presented on AI’s potential to prevent account takeover. There’s more to AI than just the hype, but its real value in creating stronger cybersecurity and moving organizations closer to zero trust can get drowned out by all the buzz.

So, let’s define our terms and get into the weeds. Let’s review some of the ways cybersecurity can really use AI to process authentication, entitlement, and usage data. And let’s define the type of AI that can work best to improve an organization’s cybersecurity stance.

Cybersecurity tends to be subdivided into core competencies: multi-factor authentication (MFA) to secure access, identity governance and administration (IGA) to enforce least privilege, security information and event management (SIEM) to monitor usage, etc., etc.

Each of those competencies is highly specialized, deploys its own tools, and protects against different risks. What they have in common is that they all produce mountains of data.

Look at identity, which is adding more users, devices, entitlements, and environments than ever. In a 2021 survey, more than 80% of respondents said that the number of identities they managed had more than doubled, and 25% reported a 10X increase.

Identity is quickly becoming a data problem: there’s more information than humans can handle. That’s why AI can be such an important asset: it can make sense of large amounts of data quickly, provided you frame the question correctly and know what to ask it.

Here are the three questions that cybersecurity professionals can use to prevent risks and detect threats:

#1. Authentication: use AI to understand who is trying to get in

AI can process authentication data to assess who is trying to authenticate into your system. It does that by looking at every user’s context, including the device that they’re using, the time that they’re trying to access it, the location they’re accessing it from, and more.

The next step is to use that current information and compare it with the past behavior of that particular user: if I authenticate from the same laptop, at the same hours, and from the same IP address this week as I did last week, then my context will probably look pretty good.

Alternatively, if someone claiming to be me tries to log in at 3 AM from a new device and a new IP address, then AI should automatically trigger step-up authentication to challenge that suspicious behavior.
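To make the comparison with past behavior concrete, here is a minimal sketch that scores a sign-in by how unfamiliar its device, hour, and IP address are for that particular user, and asks for step-up authentication above a threshold. The class and function names, the weights, and the 0.5 threshold are all hypothetical illustrations, not how RSA Risk AI or any other product works; a real system would learn these values and use far richer context.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LoginContext:
    device_id: str
    hour: int  # local hour of the attempt, 0-23
    ip_address: str


@dataclass
class UserHistory:
    """Counts of the contexts this user has signed in from before."""
    devices: Counter = field(default_factory=Counter)
    hours: Counter = field(default_factory=Counter)
    ips: Counter = field(default_factory=Counter)

    def record(self, ctx: LoginContext) -> None:
        self.devices[ctx.device_id] += 1
        self.hours[ctx.hour] += 1
        self.ips[ctx.ip_address] += 1


def novelty(seen: Counter, value) -> float:
    """0.0 if every past sign-in used this value, 1.0 if it has never been seen."""
    total = sum(seen.values())
    if total == 0:
        return 1.0
    return 1.0 - seen[value] / total


# Hypothetical weights and threshold; a real model would learn and tune these.
WEIGHTS = {"device": 0.4, "hour": 0.3, "ip": 0.3}
STEP_UP_THRESHOLD = 0.5


def risk_score(history: UserHistory, ctx: LoginContext) -> float:
    return (WEIGHTS["device"] * novelty(history.devices, ctx.device_id)
            + WEIGHTS["hour"] * novelty(history.hours, ctx.hour)
            + WEIGHTS["ip"] * novelty(history.ips, ctx.ip_address))


def decide(history: UserHistory, ctx: LoginContext) -> str:
    """Allow familiar contexts; challenge unfamiliar ones with step-up authentication."""
    return "step_up" if risk_score(history, ctx) >= STEP_UP_THRESHOLD else "allow"


history = UserHistory()
for _ in range(10):
    history.record(LoginContext("laptop-01", 9, "203.0.113.7"))

print(decide(history, LoginContext("laptop-01", 9, "203.0.113.7")))        # allow
print(decide(history, LoginContext("unknown-device", 3, "198.51.100.23")))  # step_up
```

With these weights, a single unfamiliar signal (say, only a new IP address) scores 0.3 and is allowed, while the "new device at 3 AM from a new IP" case scores the maximum and is challenged.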

Importantly, those decisions about what makes for ‘good context’ and ‘bad context’ aren’t static: instead, the AI should constantly re-evaluate its decisions to reflect users’ and organizations’ overall behavior and adapt to whatever ‘normal’ looks like. User behavior always changes and the AI always needs to take that into account.
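One simple way to keep "normal" from going stale is to let older observations fade. The sketch below is an assumption for illustration, not a description of any product: each new observation shrinks the weight of everything seen before by a hypothetical `decay` factor, so a user who changes habits (here, moving from an office network to a home network) soon gets a baseline that reflects the new behavior.

```python
class DecayingBaseline:
    """Tracks how often a user shows a given value (IP, device, location...),
    letting older observations fade so the baseline follows changing behavior."""

    def __init__(self, decay: float = 0.9) -> None:
        if not 0.0 < decay < 1.0:
            raise ValueError("decay must be strictly between 0 and 1")
        self.decay = decay
        self.weights: dict[str, float] = {}

    def observe(self, value: str) -> None:
        # Shrink every existing weight, then add the newest observation at full weight.
        for key in self.weights:
            self.weights[key] *= self.decay
        self.weights[value] = self.weights.get(value, 0.0) + 1.0

    def share(self, value: str) -> float:
        """Fraction of (decayed) past activity that used this value."""
        total = sum(self.weights.values())
        return self.weights.get(value, 0.0) / total if total else 0.0


# A user who worked from the office, then switched to working from home.
baseline = DecayingBaseline(decay=0.8)
for _ in range(20):
    baseline.observe("office-network")
for _ in range(20):
    baseline.observe("home-network")

print(baseline.share("home-network") > 0.9)    # True: home is now "normal"
print(baseline.share("office-network") < 0.1)  # True: the old habit has faded
```

A smaller `decay` adapts faster but forgets faster too; choosing that trade-off is exactly the kind of tuning a production model would need to do with real data.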

Static rule sets aren’t fine-grained enough to account for individual context and lack the depth of reference data needed to automate actions with confidence.

Or, to put it another way: static rule sets can’t know whether it’s normal for me, specifically me, to try to sign in for the 8th time within 60 minutes from Germany at 23:00. Maybe that’s normal behavior for me, or maybe it’s fishy. Either way, static rule sets can’t tell.
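The difference between a static rule and a per-user baseline can be shown with sign-in counts alone. In this hedged sketch, the 5-per-hour limit, the z-score cut-off of 3, and both users' histories are hypothetical; it only considers how many sign-ins happened in the last hour, not location or time of day.

```python
from statistics import mean, pstdev

STATIC_LIMIT = 5  # hypothetical one-size-fits-all rule: >5 sign-ins per hour is suspicious


def static_rule_flags(count_last_hour: int) -> bool:
    return count_last_hour > STATIC_LIMIT


def per_user_flags(count_last_hour: int, hourly_history: list[int], z_cutoff: float = 3.0) -> bool:
    """Flag only if this hour is unusual for *this* user, measured against their own history."""
    mu = mean(hourly_history)
    sigma = pstdev(hourly_history) or 1.0  # avoid dividing by zero for perfectly regular users
    return (count_last_hour - mu) / sigma > z_cutoff


# Sign-ins per hour over past working hours for two hypothetical users.
developer_history = [7, 8, 9, 8, 7, 9, 8, 8]   # signs in to many tools all day
occasional_history = [0, 1, 0, 1, 0, 0, 1, 0]  # rarely signs in

print(static_rule_flags(8), per_user_flags(8, developer_history))   # True False
print(static_rule_flags(4), per_user_flags(4, occasional_history))  # False True
```

The static rule raises a false alarm for the busy developer and misses the burst from the occasional user; the per-user baseline gets both right because it asks "is this normal for you?" rather than "is this normal for anyone?"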

For nearly 20 years, RSA has been using machine learning algorithms and behavioral analytics to help customers define what ‘good’ and ‘bad’ context looks like and automate responses to users’ behaviors. RSA Risk AI processes the vast amount of data users generate to complement traditional authentication and access techniques and help businesses make smarter, faster, and safer access decisions at scale.

#2. Account and Entitlement: use AI to learn what someone could access

AI processes authentication data to find out who is trying to gain access. It reviews account and authorization data to answer a different question: what could someone access?

The AI answers this by looking at accounts and entitlements across applications. Doing so helps organizations move to least privilege (an important building block of zero trust) and identify any segregation of duties violations.
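A segregation of duties check, at its simplest, is a lookup of conflicting entitlement pairs across every account. The sketch below is a plain rule check with hypothetical entitlement names and conflict rules; the value AI adds is running this kind of analysis across millions of records and surfacing the less obvious patterns that no one thought to write a rule for.

```python
# Hypothetical segregation-of-duties rules: entitlement pairs no one should hold together.
SOD_CONFLICTS = [
    ("create_vendor", "approve_payment"),
    ("submit_expense", "approve_expense"),
]


def sod_violations(entitlements: dict[str, set[str]]) -> dict[str, list[tuple[str, str]]]:
    """Return, for each user, every conflicting pair of entitlements they hold at once."""
    found: dict[str, list[tuple[str, str]]] = {}
    for user, granted in entitlements.items():
        hits = [(a, b) for a, b in SOD_CONFLICTS if a in granted and b in granted]
        if hits:
            found[user] = hits
    return found


accounts = {
    "alice": {"create_vendor", "view_reports"},
    "bob": {"create_vendor", "approve_payment", "view_reports"},
}
print(sod_violations(accounts))  # {'bob': [('create_vendor', 'approve_payment')]}
```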

For humans, processing account and entitlement information would be next to impossible: entitlement records can quickly reach into the millions. Reviewing that data manually is bound to fail—a human reviewer would likely hit “Approve All” and be done with it.

But while conducting a thorough entitlement review is high-effort, it’s also high-value—particularly in developing a stronger cybersecurity stance. Because humans are so quick to hit the “Approve All” button, we’re creating accounts that have far more entitlements than they need: only 2% of entitlements are used.

Those entitlement risks scale as organizations integrate more cloud environments: Gartner predicts that the “inadequate management of identities, access and privileges will cause 75% of cloud security failures” this year, and that half of enterprises will mistakenly expose some of their resources directly to the public.

Organizations can find valuable insights, like outlier users, by searching through their entitlement data. Outlier users look very similar to other sets of users but have some combination of entitlements that makes them different. Those differences may not be as obvious as a segregation of duties violation, but they may still be significant enough for the AI to recognize. An access review can then focus on those outlier users rather than on the other 99% of users, whose entitlements are considered lower risk or have been approved before.
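Here is a deliberately simple way to surface outlier users, assuming users have already been grouped into peers (same role or department): flag anyone holding an entitlement that fewer than 20% of their peers hold. This rarity heuristic, the threshold, and the names are illustrative assumptions, not RSA’s method; real systems may use clustering or other peer-analysis models.

```python
from collections import Counter


def outlier_users(peer_group: dict[str, set[str]], min_share: float = 0.2) -> dict[str, list[str]]:
    """Flag users holding entitlements that few of their peers hold.

    peer_group maps each user to their entitlements, for users who share a role
    or department. An entitlement is "rare" if fewer than min_share of the group holds it.
    """
    size = len(peer_group)
    holders = Counter(ent for ents in peer_group.values() for ent in ents)
    rare = {ent for ent, n in holders.items() if n / size < min_share}
    return {user: sorted(ents & rare) for user, ents in peer_group.items() if ents & rare}


# Ten analysts with the same baseline access; one also holds payroll export rights.
analysts = {f"analyst{i}": {"crm_read", "reports_read"} for i in range(9)}
analysts["analyst9"] = {"crm_read", "reports_read", "payroll_export"}

print(outlier_users(analysts))  # {'analyst9': ['payroll_export']}
```

The review then covers one user and one entitlement instead of all ten analysts, which is the point of focusing on outliers.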

Finding those small needles in all those towering haystacks is easy for AI and all but impossible for humans.

#3. Application Usage: use AI to learn what someone is really doing

AI looks at authentication data to determine who is trying to gain access. It looks at entitlement data to understand what someone could access.

When it comes to application usage data, AI is trying to answer what someone actually does.

There’s a constant stream of incredibly useful real-time data across all applications and components of an organization’s infrastructure: an AI could see what resources I really used to write this blog post, who I went to for help, the data I consulted, the apps I used, and so on. That tells my organization about what I’m doing and the steps I really needed to complete it.

Importantly, that analysis can also reveal incorrect, illegal, out-of-compliance, or risky activity: just because a user has an entitlement doesn’t mean they should have it, or that every use of it is appropriate. AI can process application usage data to find those mistakes and address them. Sure, you have the entitlements to that sensitive SharePoint site, but why have you downloaded a large number of files from it in the last 30 minutes?
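The SharePoint question can be sketched as a sliding-window count compared against the user’s own typical volume. The event format, the user and site names, the 5x multiplier, and the floor of 10 files are all hypothetical; the point is that the check looks at what the user actually did, not at what they are allowed to do.

```python
from datetime import datetime, timedelta
from statistics import median


def downloads_in_window(events, user, site, now, minutes=30):
    """Count this user's downloads from one site in the last `minutes` minutes."""
    start = now - timedelta(minutes=minutes)
    return sum(1 for u, s, ts in events if u == user and s == site and start < ts <= now)


def is_unusual_volume(current, past_window_counts, multiplier=5.0, floor=10):
    """Flag a window whose volume far exceeds the user's typical 30-minute volume.

    The multiplier and floor are hypothetical tuning knobs; the floor keeps users
    who normally download almost nothing from being flagged for a handful of files.
    """
    typical = median(past_window_counts) if past_window_counts else 0
    return current > max(typical * multiplier, floor)


now = datetime(2023, 6, 1, 12, 0)
# 250 downloads, one every 5 seconds, all within the last ~21 minutes.
events = [("dave", "hr-sharepoint", now - timedelta(seconds=5 * i)) for i in range(250)]
past = [1, 2, 3, 2, 2]  # dave's usual downloads per 30-minute window

current = downloads_in_window(events, "dave", "hr-sharepoint", now)
print(current, is_unusual_volume(current, past))  # 250 True
```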

There are two major types of AI: deterministic and non-deterministic. Machine Learning is mostly deterministic AI. It’s best at processing structured data. Deep Learning is often a type of non-deterministic AI that’s best at processing unstructured data.

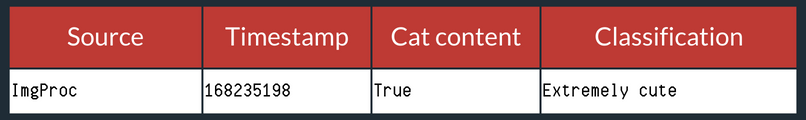

Another way of putting it: Deep Learning would be able to look at this image and answer “Where is the cat in the picture?”

Machine Learning would be able to look at a log file and answer “What happened at Timestamp 168235198?”

In security, whether you’re trying to use AI to assess authentication, entitlement, or application usage data, the information that we’re looking at is almost exclusively structured data.

That means that, largely, we want to use Machine Learning, or deterministic AI, in cybersecurity. Deterministic AI handles the inputs we feed it more transparently than non-deterministic AI. I say “more transparently” because, let’s face it, not all of us (me included) have the advanced math knowledge to follow every detail. However, many people do, and a deterministic ML model can be explained to them in its entirety.

There is research under way to better understand how non-deterministic AIs work. I am curious where it leads and whether we will end up completely understanding how neural networks and other non-deterministic AIs actually work.

Just as important as handling those structured inputs are the outputs that deterministic AI produces. Non-deterministic AI is more of a black box: we humans can’t be exactly sure how a non-deterministic AI produced a given image, for example. There is the input at the beginning and the output at the end, and if you opened that black box in the middle, you would see a fantasy land of dragons and unicorns: it simply is a mystery.

With deterministic AI, we can know how our model arrived at its answer. Theoretically, we could check a deterministic AI’s work by plugging the same inputs in manually and arriving at the same answer. It’s just that doing so by hand would require an unholy amount of notepaper, coffee, and sanity, and it could never be done in real time.
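To show what "checking the model’s work" can look like, here is a sketch using logistic regression, one common interpretable ML model, chosen here as an example rather than as what any particular product uses. The bias and weights are hypothetical stand-ins for coefficients learned earlier. Every feature’s contribution to the score is visible, and the same inputs always produce the same output, so an auditor could redo the arithmetic by hand.

```python
import math

# Hypothetical coefficients, standing in for a previously trained logistic-regression model.
BIAS = -4.0
WEIGHTS = {"new_device": 2.0, "unusual_hour": 1.5, "new_ip": 1.5, "failed_attempts": 0.5}


def explain(features: dict[str, float]) -> dict[str, float]:
    """Each feature's contribution to the log-odds: simply weight * value."""
    return {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}


def risk_probability(features: dict[str, float]) -> float:
    """Sigmoid of (bias + sum of contributions); same inputs always give the same output."""
    logit = BIAS + sum(explain(features).values())
    return 1.0 / (1.0 + math.exp(-logit))


attempt = {"new_device": 1, "unusual_hour": 1, "new_ip": 1, "failed_attempts": 2}
print(explain(attempt))
# {'new_device': 2.0, 'unusual_hour': 1.5, 'new_ip': 1.5, 'failed_attempts': 1.0}
print(round(risk_probability(attempt), 2))  # 0.88
```

By hand: the contributions sum to 6.0, adding the bias gives a log-odds of 2.0, and 1 / (1 + e^-2) is about 0.88. That audit trail is what security and audit teams can point to.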

For security and audit teams, having that transparency and understanding how your AI is working are critical to maintaining compliance and applying for certifications.

That’s not to say that there’s no role for non-deterministic / deep learning algorithms in cybersecurity. There is: deep learning may find answers that we didn’t search for and can be used to improve deterministic models. But cybersecurity professionals would need to put a significant amount of trust in that black box and in what it produces.

We’re betting big on AI and think that it can have a significant role in creating stronger cybersecurity.

But just as important as AI is having all your identity components work together: authentication, access, governance, and lifecycle need to collaborate to protect the entire identity lifecycle.

Combining these functions into a unified identity platform helps organizations protect the blind spots that result from point solutions; it also creates more data inputs to train AI into something even smarter.